TLDR

For basic use cases with text (summarize, extract, classify, etc.), start with GPT-4o mini. It works really well, and the pricing is terrific: roughly 25x to 33x cheaper per token than GPT-4o.

For use cases with video, audio, images, or complex reasoning, use GPT-4o.

Overview

This post is for people (typically non-engineers) who want to use AI in their own workflows to extract information from PDFs, summarize emails, etc. If you're looking for detailed technical comparisons on latency, parameters, fine-tuning, etc., you'll need to look elsewhere. But if you need to know the practicalities of using GPT-4o and GPT-4o mini in your day-to-day workflows, you came to the right place. First, here's the quick backstory:

OpenAI released GPT-4o (the 'o' stands for "omni") on May 13, 2024, as its new flagship model that can reason across audio, vision, and text. Broadly speaking, the announcement noted that GPT-4o matches the performance of GPT-4 Turbo on English text and code, while being much faster and 50% cheaper. GPT-4o also outperforms previous models on vision and audio understanding.

Then, only two months later, OpenAI released GPT-4o mini on July 18, 2024, to much excitement. According to the launch announcement, it's "much more affordable than previous models", even being 60% cheaper than GPT-3.5 Turbo. GPT-4o mini supports text and vision in the API, with support for text, image, video, and audio inputs and outputs coming in the future.

Basics

| Model | GPT-4o | GPT-4o mini |
| --- | --- | --- |
| Release Date | May 13, 2024 | July 18, 2024 |
| Knowledge Cutoff | October 2023 | October 2023 |
| Input Context Window | 128k tokens | 128k tokens |
| Maximum Output | 2k tokens | 16.4k tokens |
| Text | ✅ | ✅ |
| Vision | ✅ | ✅ |
| Voice | ✅ | ❌ |

Pricing

| Model | GPT-4o | GPT-4o mini |
| --- | --- | --- |
| Input Token Cost | $5 / million tokens | $0.15 / million tokens |
| Output Token Cost | $15 / million tokens | $0.60 / million tokens |

If your takeaway is that GPT-4o mini is much, much cheaper than GPT-4o (33x cheaper for input tokens and 25x cheaper for output tokens), you're generally right!
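As a quick check of those multiples, here is a minimal sketch that recomputes them from the pricing table. The dictionary keys are just labels chosen for this example.

```python
# Per-million-token prices in USD, copied from the pricing table above.
PRICES = {
    "GPT-4o": {"input": 5.00, "output": 15.00},
    "GPT-4o mini": {"input": 0.15, "output": 0.60},
}


def price_ratio(kind: str) -> float:
    """Return how many times cheaper GPT-4o mini is for one token type."""
    return PRICES["GPT-4o"][kind] / PRICES["GPT-4o mini"][kind]


for kind in ("input", "output"):
    print(f"{kind} tokens: about {price_ratio(kind):.0f}x cheaper")
```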

The one caveat is that GPT-4o mini can use many more tokens for tasks involving images and vision inputs (sometimes 20x more), so be careful before assuming that GPT-4o mini will always be cheaper than GPT-4o. You'll need to test it on your real use case to get a more accurate cost profile!
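To see how much token count matters, here is a rough sketch that prices a single request from its token counts. The token counts below are hypothetical placeholders, not measurements; swap in the numbers you observe for your own documents.

```python
# Per-million-token prices in USD, copied from the pricing table above.
PRICE_PER_MILLION = {
    "GPT-4o": {"input": 5.00, "output": 15.00},
    "GPT-4o mini": {"input": 0.15, "output": 0.60},
}


def request_cost_usd(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimate the cost of one request from its token counts."""
    price = PRICE_PER_MILLION[model]
    total = input_tokens * price["input"] + output_tokens * price["output"]
    return total / 1_000_000


# Hypothetical image task: assume GPT-4o mini consumes 20x the input tokens.
cost_4o = request_cost_usd("GPT-4o", input_tokens=1_000, output_tokens=200)
cost_mini = request_cost_usd("GPT-4o mini", input_tokens=20_000, output_tokens=200)

print(f"GPT-4o:      ${cost_4o:.5f}")
print(f"GPT-4o mini: ${cost_mini:.5f}")
print(f"GPT-4o mini is {cost_4o / cost_mini:.1f}x cheaper on this request")
```

With these made-up counts the advantage shrinks from roughly 30x to under 3x. Platforms that bill in their own credits may price things differently again, which is why measuring on real examples matters.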

Performance

| Model | GPT-4o | GPT-4o mini |

| MMLU (Language Understanding) | 88.7 | 82.0 |

| MMMU (Multi-modal understanding) | 69.1 | 59.4 |

According to all of the benchmarks, GPT-4o is "a bit" better than GPT-4o mini. But in practice, here's what we've observed:

- For most basic cases of extraction, summarization, and classification, they both do the job really well, so it's best to start with GPT-4o mini as the typically cheaper option.

- If GPT-4o mini isn't quite good enough for a basic task, try GPT-4o, and it will probably be able to do it.

- For anything that involves images, videos, audio, or complex reasoning, you're likely best off just starting with GPT-4o.

But it's always worth comparing them for your real use case on at least a few examples, and in the next section we'll show you how to do that.

How to Test GPT-4o vs. GPT-4o mini (the simple way)

Here's a tool to help you quickly run a head-to-head comparison of the two models for the use case of extracting data from images or PDF documents. Simply paste in your sample document and define what you would like extracted, and the tool will automatically run against both models and produce a side-by-side comparison of the outputs and cost.


If you want to test out a more complex use case, or do a more advanced test on many examples, read on.

How to Test GPT-4o vs. GPT-4o mini (the more advanced way)

Comparing single examples may not give you the full picture of how the model will perform for your use case. In this part of the tutorial, I'll show you how to quickly run a comparison of both quality and cost of GPT-4o and GPT-4o mini with multiple examples using Relay.app.

I'll share the results for my use case of parsing information out of invoices, and you can use this same process for whatever your task may be, from summarizing long-form documents, to editing blog posts, to generating email text. Here's a quick video demo, and you can see the full step-by-step process with screenshots below.

How to set up the experiment

Step 1: Sign up for a free Relay.app account

Navigate to https://relay.app and click "Start for free" in the top right. You'll then be able to create a free Relay.app account using your Google or Microsoft login information.

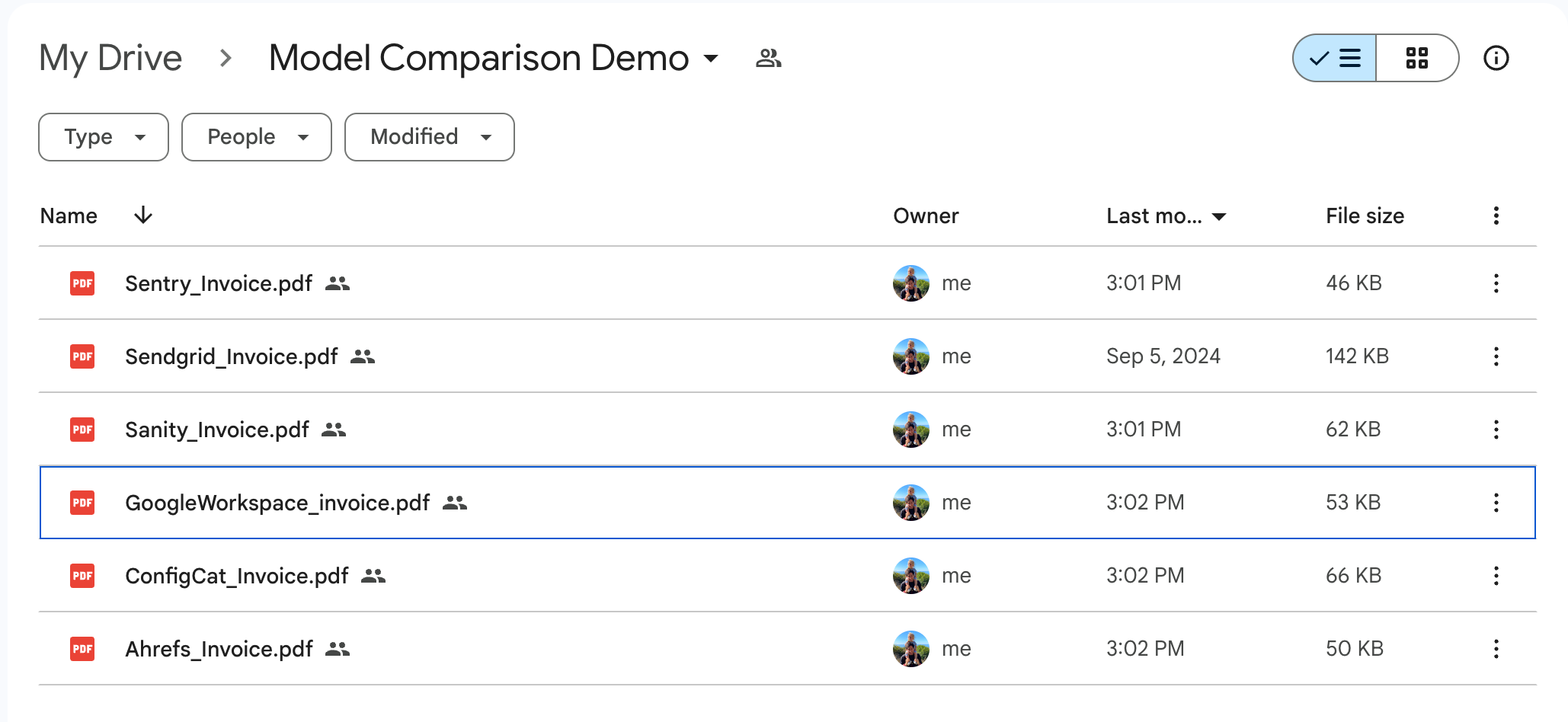

Step 2: Organize your testing data

Next you'll need to create a set of examples to test your workflows. In this case, I'll use a Drive folder of invoices I've received. In your case, it could be a labelled set of emails or spreadsheet rows.

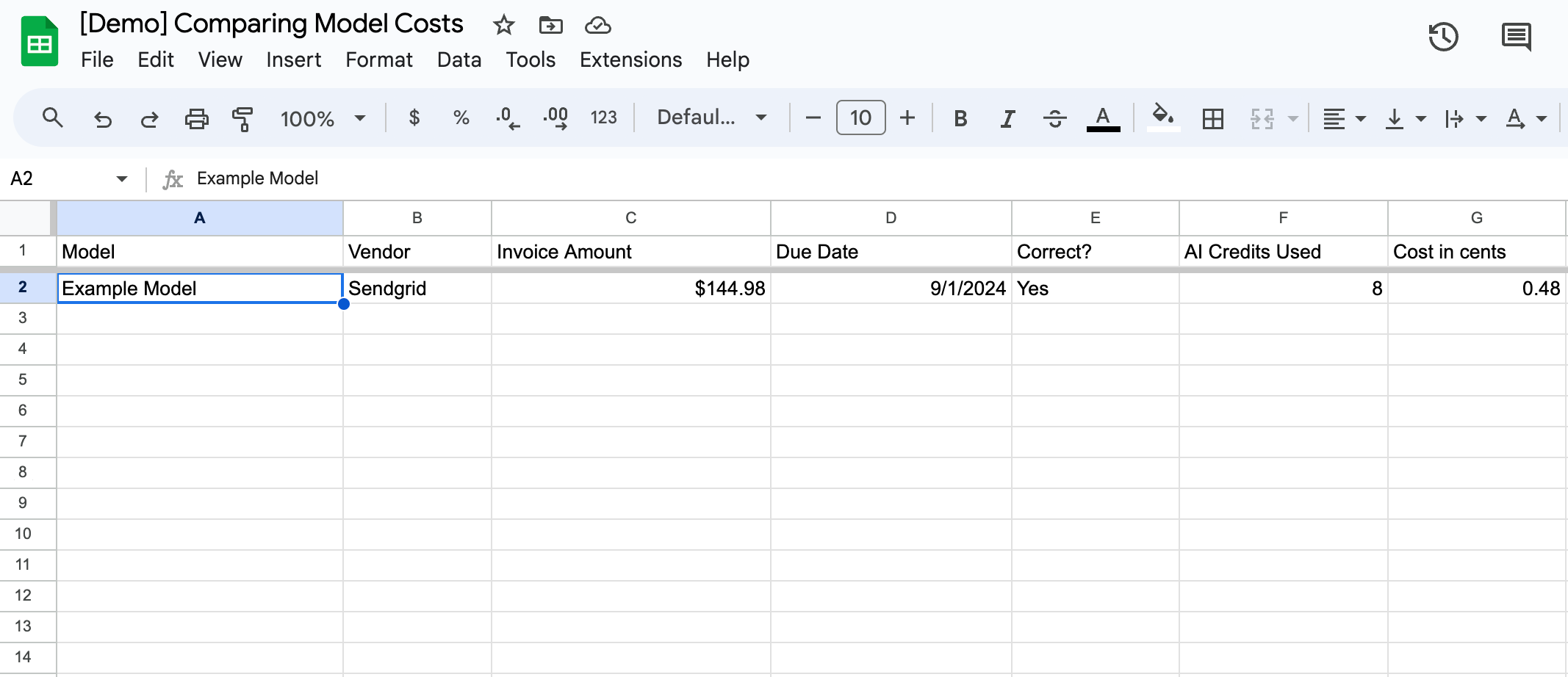

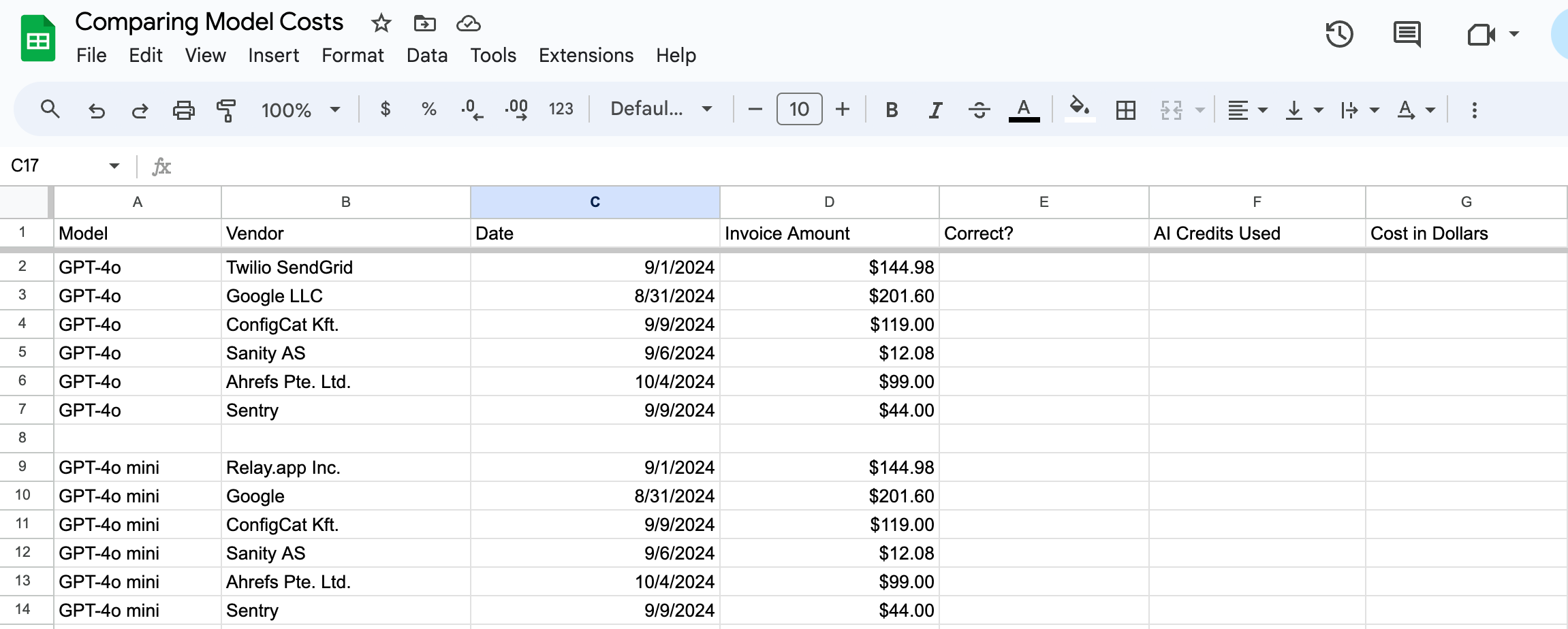

Step 3: Create your analysis Sheet

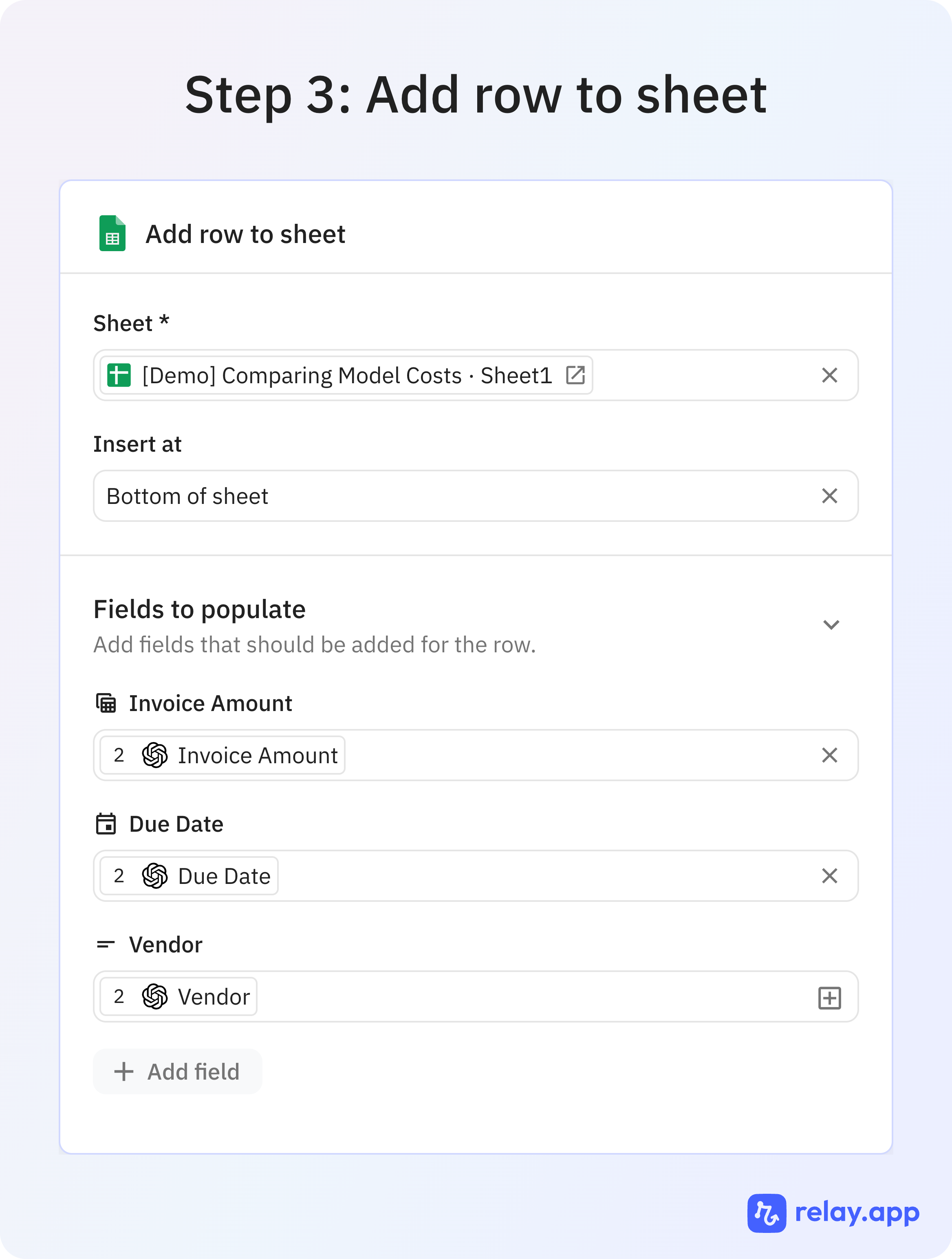

Next, create a simple spreadsheet to perform your analysis. You'll be writing a new row for each run of each model, so you should include columns for each item of output along with columns to check correctness and calculate cost.
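If it helps to see the layout concretely, here is a small sketch that builds the header row as CSV text you could paste or import into a sheet. The column names are assumptions based on my invoice example (vendor, amount, date); rename them to match whatever your own task outputs.

```python
import csv
import io

# Hypothetical column layout: one row per (example, model) run.
COLUMNS = [
    "example",     # which test document the row refers to
    "model",       # GPT-4o or GPT-4o mini
    "vendor",      # extracted output fields
    "amount",
    "date",
    "correct",     # filled in by hand when grading
    "ai_credits",  # copied from the completed run
    "cost_cents",  # computed from ai_credits
]


def new_sheet() -> str:
    """Return CSV text containing only the header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=COLUMNS)
    writer.writeheader()
    return buffer.getvalue()


print(new_sheet())
```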

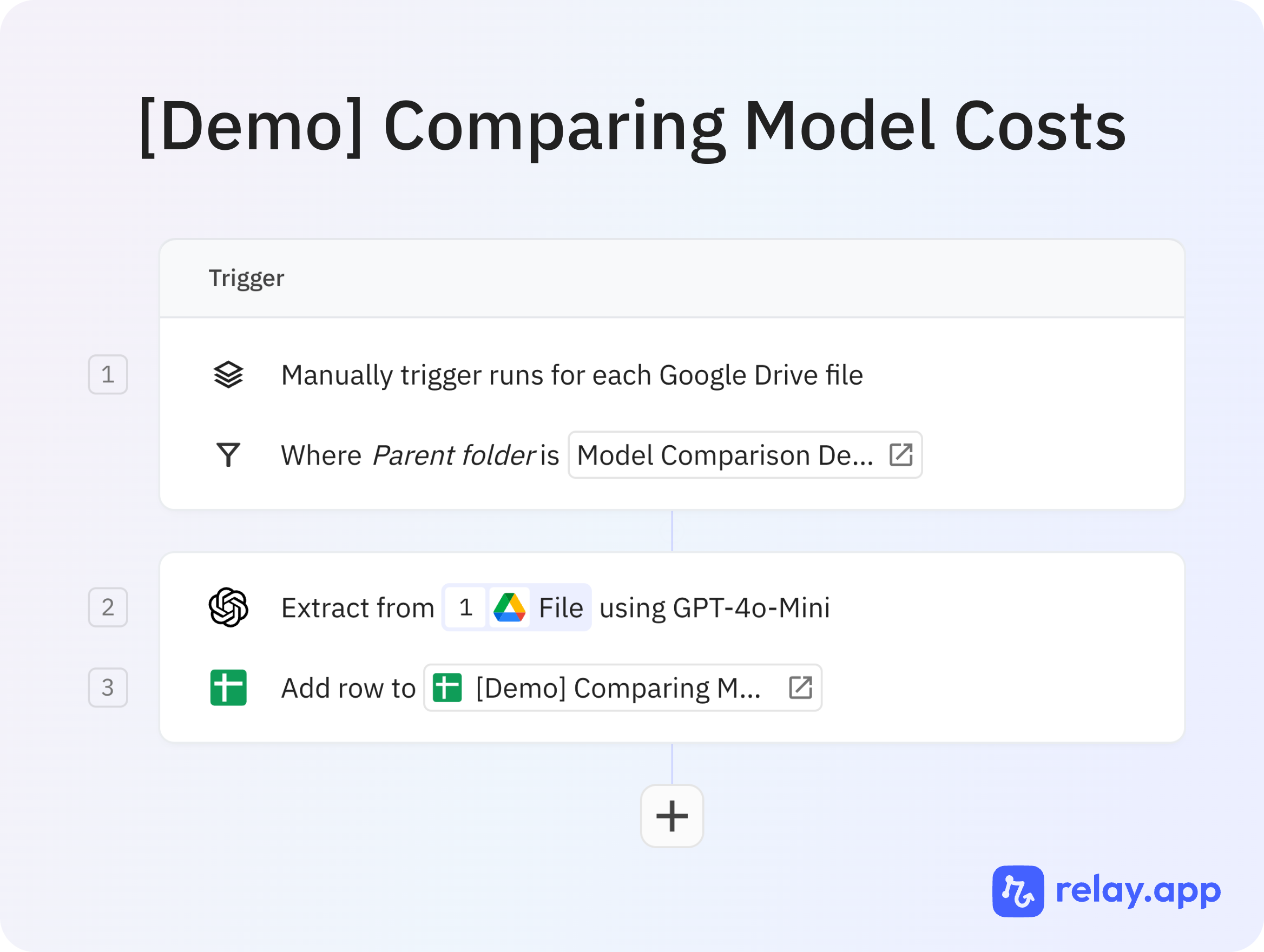

Step 4: Set up your Relay.app workflow

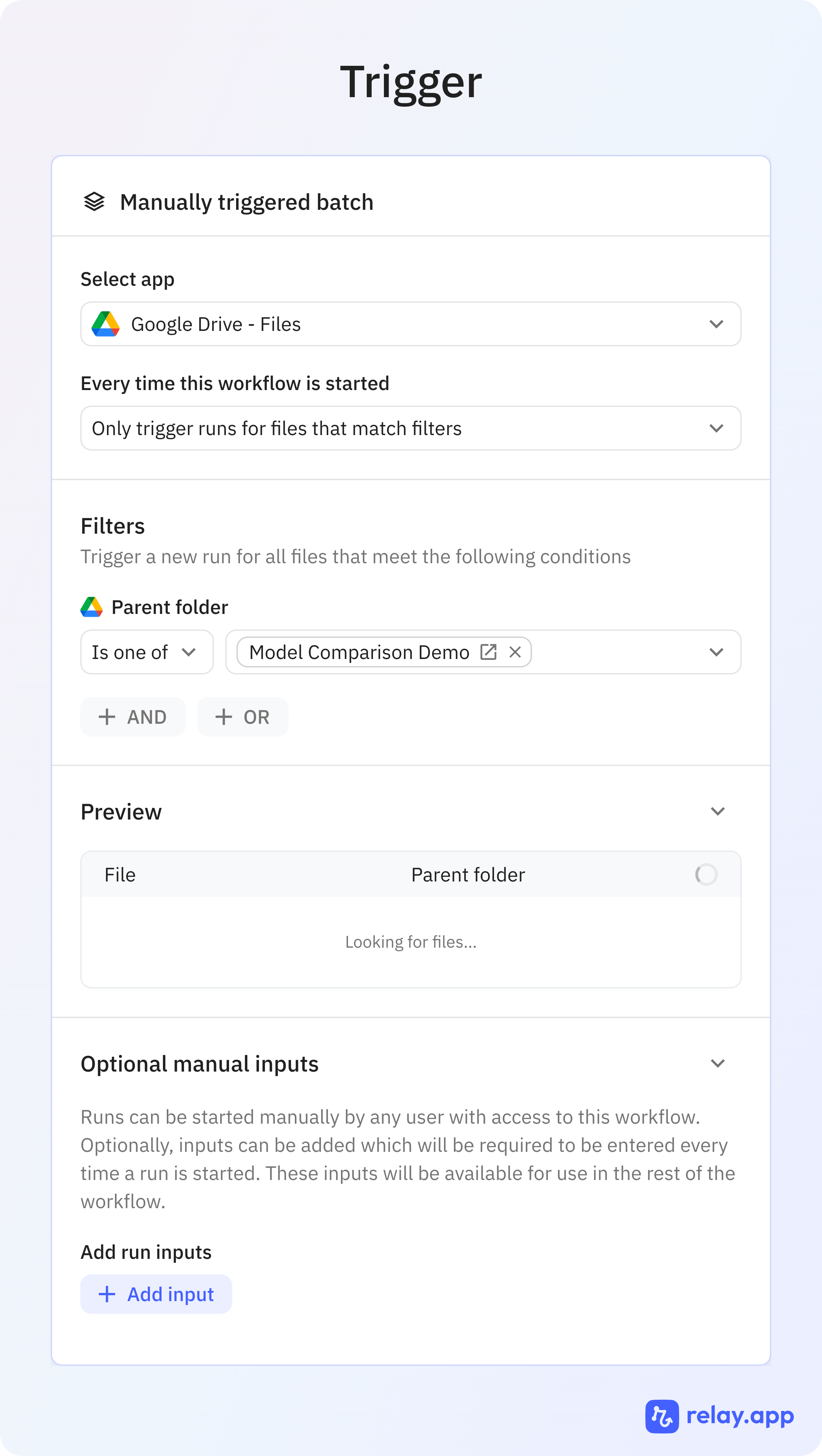

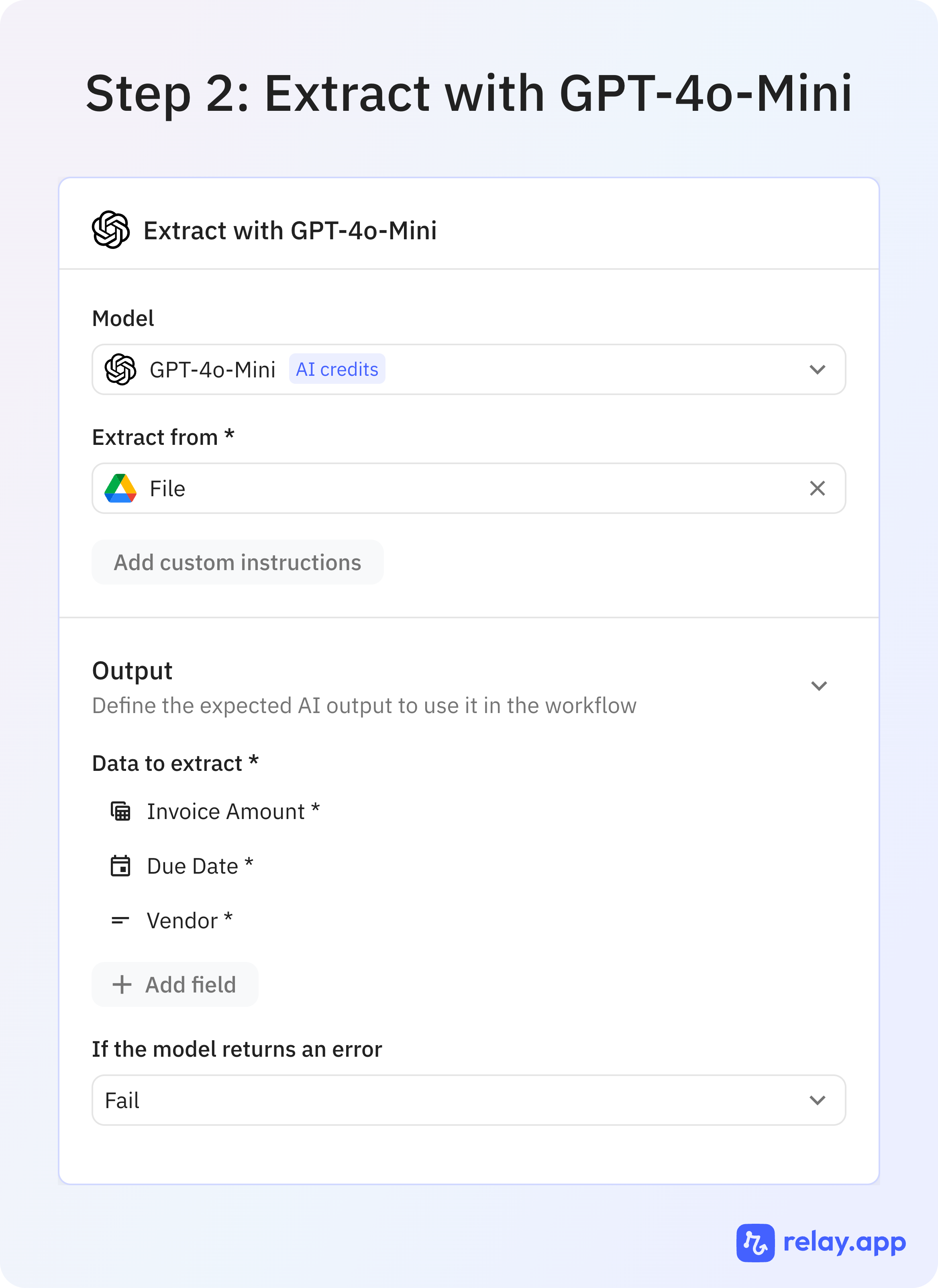

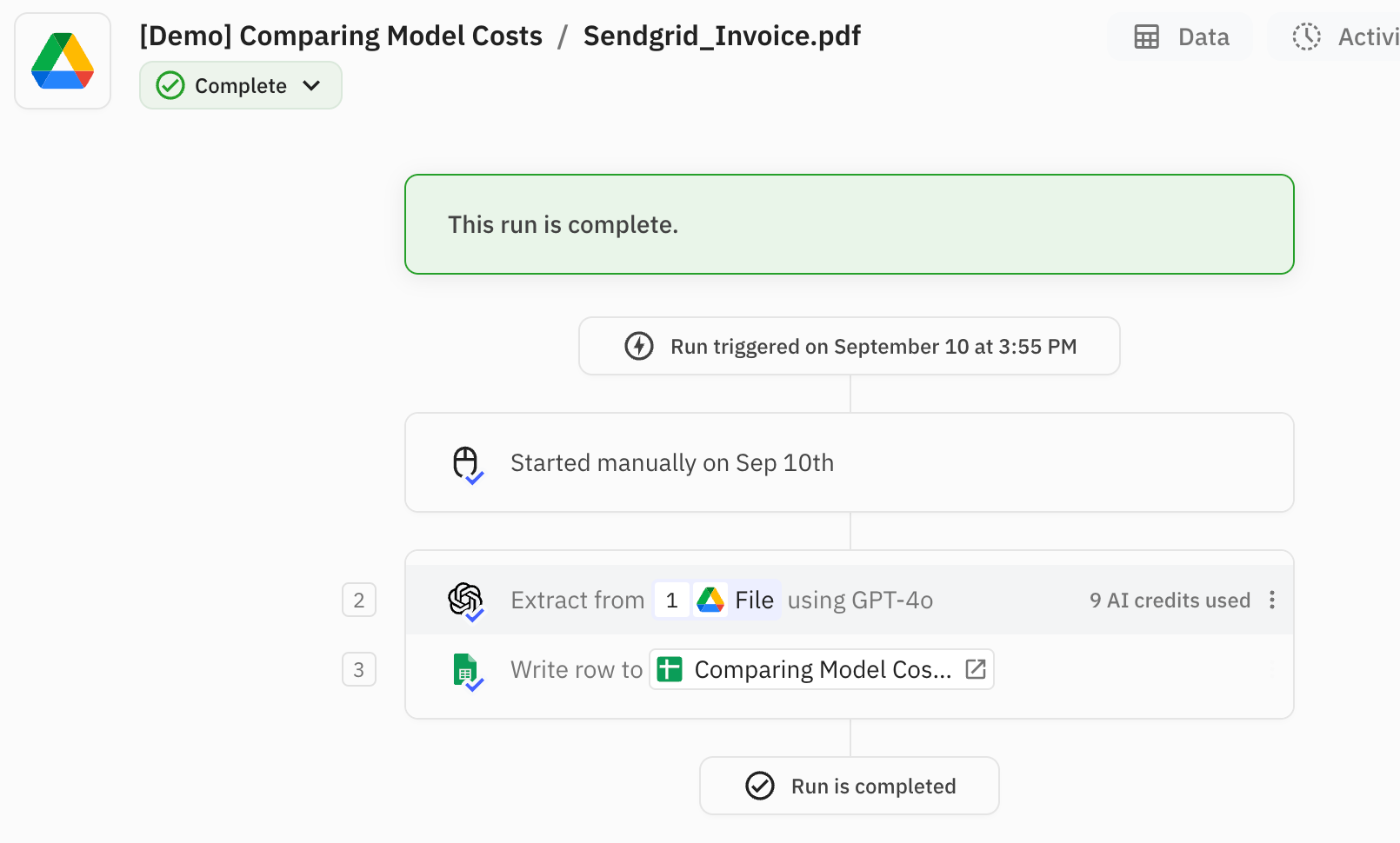

For your Relay.app workflow, you'll use a Manual Batch Trigger to run the workflow over each example in your test data. Your AI step will include any prompt you want and specify the output you want. Your final step will write the results to the spreadsheet.
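Conceptually, the workflow is a loop over your examples with one AI call per model and one spreadsheet row per call. The sketch below shows that shape in plain Python; it is not how Relay.app is implemented, and `run_model` and `fake_model` are hypothetical stand-ins for the AI step.

```python
from typing import Callable, Dict, List

MODELS = ["GPT-4o", "GPT-4o mini"]


def run_batch(
    examples: List[str],
    prompt: str,
    run_model: Callable[[str, str, str], Dict[str, str]],
) -> List[Dict[str, str]]:
    """Run every example through every model and collect one row per run."""
    rows = []
    for example in examples:      # batch trigger: one pass per test example
        for model in MODELS:      # same prompt sent to each model
            output = run_model(model, prompt, example)                   # AI step
            rows.append({"example": example, "model": model, **output})  # write step
    return rows


def fake_model(model: str, prompt: str, example: str) -> Dict[str, str]:
    """Stand-in for the AI step; returns fixed placeholder fields."""
    return {"vendor": "ACME", "amount": "100.00", "date": "2024-07-18"}


rows = run_batch(["invoice-1.pdf", "invoice-2.pdf"], "Extract vendor, amount, date", fake_model)
print(len(rows), "rows written")
```

Keeping the prompt identical across both models is the point of the design: the only variable that changes between rows for the same example is the model.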

Step 5: Run the Test

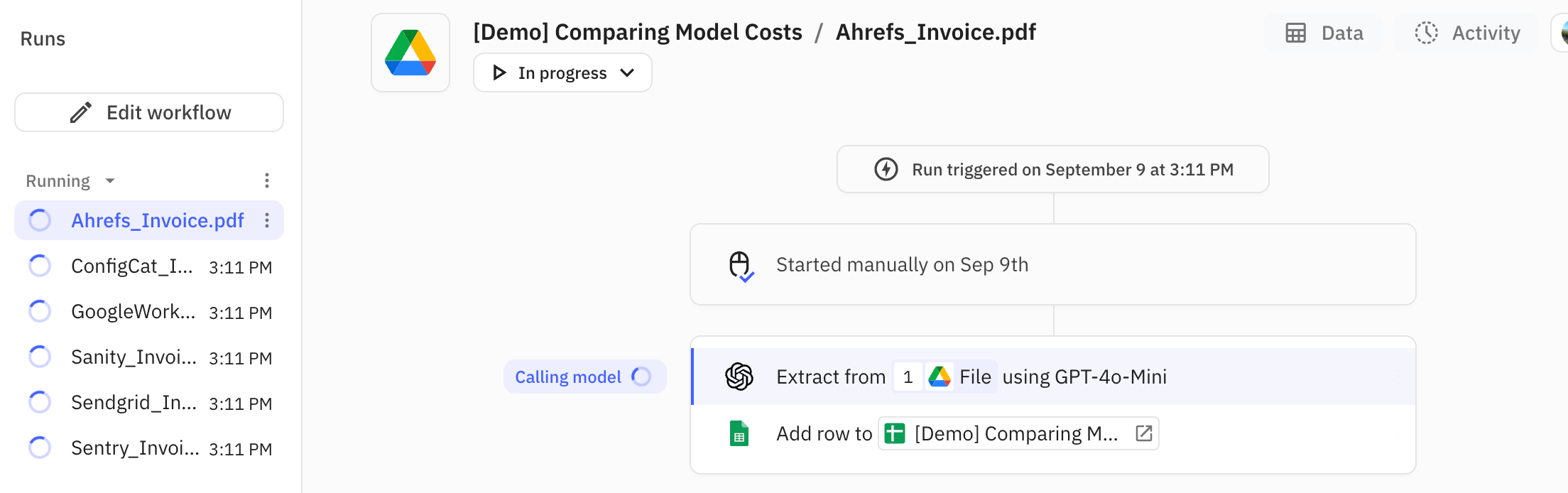

To run the test, click "Start a batch of runs".

Step 6: Analyze the Results

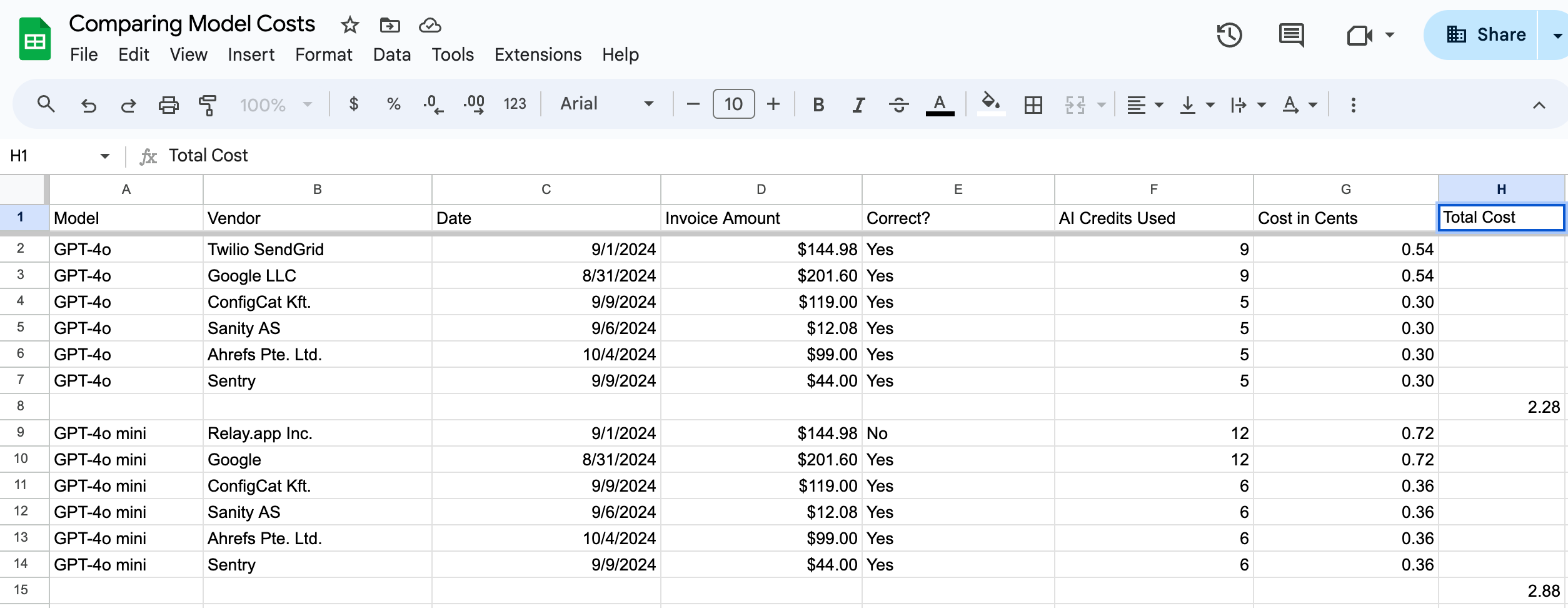

Next, it's time to grade the results! For each entry, mark whether it's correct or not (or give it a numerical grade if it's not a binary task) and note the AI credit usage. To find the AI credit usage for a given run, navigate to the completed run view.

To compute the cost in cents, multiply the AI credits by 0.06.
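In a spreadsheet this is a one-cell formula; as a minimal sketch in code, the same conversion looks like this. The credit value passed in is a made-up example.

```python
CENTS_PER_CREDIT = 0.06  # conversion rate stated above


def credits_to_cents(ai_credits: float) -> float:
    """Convert AI credits for a run into cost in cents."""
    return ai_credits * CENTS_PER_CREDIT


# Hypothetical run that used 50 AI credits.
print(f"{credits_to_cents(50):.2f} cents")
```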

As shown above, we have now produced an output spreadsheet with all of our examples analyzed and a cost comparison for each model for each example.
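To turn the graded rows into a per-model verdict, you can total accuracy and cost for each model. Here is a rough sketch of that roll-up; the rows are placeholder values for illustration only, not my actual results, and the field names are assumptions.

```python
from collections import defaultdict
from typing import Dict, List

CENTS_PER_CREDIT = 0.06  # conversion rate from the previous step


def summarize(rows: List[dict]) -> Dict[str, Dict[str, float]]:
    """Return accuracy and total cost in cents for each model."""
    totals = defaultdict(lambda: {"runs": 0, "correct": 0, "credits": 0.0})
    for row in rows:
        entry = totals[row["model"]]
        entry["runs"] += 1
        entry["correct"] += 1 if row["correct"] else 0
        entry["credits"] += row["ai_credits"]
    return {
        model: {
            "accuracy": entry["correct"] / entry["runs"],
            "cost_cents": entry["credits"] * CENTS_PER_CREDIT,
        }
        for model, entry in totals.items()
    }


# Placeholder graded rows (made-up numbers).
graded = [
    {"model": "GPT-4o", "correct": True, "ai_credits": 10},
    {"model": "GPT-4o", "correct": True, "ai_credits": 12},
    {"model": "GPT-4o mini", "correct": True, "ai_credits": 14},
    {"model": "GPT-4o mini", "correct": False, "ai_credits": 13},
]

summary = summarize(graded)
for model, stats in summary.items():
    print(f"{model}: {stats['accuracy']:.0%} correct, {stats['cost_cents']:.2f} cents")
```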

Conclusion

In my use case, both GPT-4o and GPT-4o mini performed well. GPT-4o was perfect, and GPT-4o mini got the wrong vendor name for one invoice (but got all of the amounts and dates correct). Counterintuitively, GPT-4o was also 20% cheaper. So in my case, GPT-4o is the clear way to go: better performance at a better price. But I can't guarantee that will be the case for you.

So if you're wondering which model to use, comparing benchmarks and per-token costs on paper will only get you so far. The only way to really know is to test it for real, and Relay.app makes it really easy to do so in just a few minutes!